We Have Expressions at Home

The most common operation you’ll perform on tabular data is the selection and transformation of a single column. In pandas, this usually starts with Python’s __getitem__ syntax (df[...]; the square brackets).

This interface is simple and familiar. You can slice, select, and modify with just a few keystrokes, and for many workflows, that’s more than enough. But as soon as your transformations start stacking up, especially when you want to keep your logic tidy and readable, this approach starts to feel a little clumsy.

Over the years, pandas has evolved to support a more expressive style of programming, namely method chaining, that can help avoid the boilerplate and cognitive clutter of intermediate variables. But there’s a catch: method chaining and column assignment don’t always play nicely together.

Today, I wanted to discuss that tension and what we can do about it as well as my passing thoughts on the recent pandas.DataFrame.select proposal.

Let’s start with the basics: Selecting columns in pandas is done using the square bracket syntax, and depending on what you pass in whether that is a single column name or a list of names, you’ll get back either a Series or a DataFrame. This behavior forms the foundation for how most people interact with pandas.

import pandas as pd

df = pd.DataFrame({'a': [1,2,3], 'b': [4,5,6], 'c': [7, 8, 9]})

display(

df['a'], # Singular column returning a Series

df[['b']], # Singular column returning a DataFrame

df[['a', 'b']], # Multiple columns returning a DataFrame

)0 1

1 2

2 3

Name: a, dtype: int64| b | |

|---|---|

| 0 | 4 |

| 1 | 5 |

| 2 | 6 |

| a | b | |

|---|---|---|

| 0 | 1 | 4 |

| 1 | 2 | 5 |

| 2 | 3 | 6 |

Then, if we want to modify a column or a group of columns, you can transform them directly by using a combination of operators and available methods.

display(

df['a'] ** 2, # Singular column returning a Series

df[['b']] ** 2, # Singular column returning a DataFrame

df[['a', 'b']] ** 2, # Multiple columns returning a DataFrame

)0 1

1 4

2 9

Name: a, dtype: int64| b | |

|---|---|

| 0 | 16 |

| 1 | 25 |

| 2 | 36 |

| a | b | |

|---|---|---|

| 0 | 1 | 16 |

| 1 | 4 | 25 |

| 2 | 9 | 36 |

Note that the original DataFrame does not preserve these transformations. If you want to create a new column or update an existing column, you will need to assign back to it using Python’s __setitem__ syntax (df[…] = …; assigning back to a set of square brackets invoked on an object).

df['d'] = df['a'] ** 2

df[['e', 'f']] = df[['a', 'b']] * -1

print(df) a b c d e f

0 1 4 7 1 -1 -4

1 2 5 8 4 -2 -5

2 3 6 9 9 -3 -6And the pandas userbase was satisfied with this solution (many years ago). It follows a concise and well-defined syntax and can be used to add/update new/existing columns.

The Rise of Chaining Syntax

However, a popular style for writing pandas code is to use a "method chaining" approach, which is visually similar to R’s pipe syntax. Passing callables to .loc and a few other DataFrame methods was added in late 2015 and its implementation was debated for a while afterwards as some felt that passing lambda functions was a bit too verbose. I personally encountered the method chaining style of writing pandas code in Tom Augspurger’s Modern pandas blog post series around that same time. If you haven't read the entries in this blog post, I still consider it to be some of the highest quality pandas material so you should definitely check it out.

The intention of method chaining is to reduce the volume of syntax one needs to write (and read) when working with a pandas.DataFrame. Take a look at the following query, where all we do is filter and sort:

df = pd.DataFrame({

'a': [1, 2, 3, 4, 5], 'b': [6, 7, 8, 9, 10], 'c': [*'XxYyz'],

})

print("Original DataFrame:")

display(df)

filtered_df = df.loc[(df['a'] >= 3) & (df['c'].str.casefold() == 'y'), :].copy()

sorted_filtered_df = filtered_df.sort_values(by='a', ascending=False)

first_sorted_filtered_df = sorted_filtered_df.head(1)

no_c_first_sorted_filtered_df = first_sorted_filtered_df.drop(columns='c')

print("Transformed DataFrame:")

display(no_c_first_sorted_filtered_df)Original DataFrame:| a | b | c | |

|---|---|---|---|

| 0 | 1 | 6 | X |

| 1 | 2 | 7 | x |

| 2 | 3 | 8 | Y |

| 3 | 4 | 9 | y |

| 4 | 5 | 10 | z |

Transformed DataFrame:| a | b | |

|---|---|---|

| 3 | 4 | 9 |

The oddly long variable names we define on each line visually obscure the intent of the code. Instead, if we rewrite this using method chaining, we can eliminate the need for these temporary variables allowing the code to becomes much more skimmable since we reduce the amount of visual noise. This allows us better focus on the anticipated operations happening rather than the boilerplate needed to get there.

df = pd.DataFrame({

'a': [1, 2, 3, 4, 5], 'b': [6, 7, 8, 9, 10], 'c': [*'XxYyz'],

})

print("Original DataFrame:")

display(df)

clean_df = (

df.loc[(df['a'] >= 3) & (df['c'].str.casefold() == 'y'), :].copy()

.sort_values(by='a', ascending=False)

.head(1)

.drop(columns='c')

)

print("Transformed DataFrame:")

display(clean_df)Original DataFrame:| a | b | c | |

|---|---|---|---|

| 0 | 1 | 6 | X |

| 1 | 2 | 7 | x |

| 2 | 3 | 8 | Y |

| 3 | 4 | 9 | y |

| 4 | 5 | 10 | z |

Transformed DataFrame:| a | b | |

|---|---|---|

| 3 | 4 | 9 |

For a direct comparison of the syntax, you can already see the benefits of not having to repeatedly create new variable names for the resultant operation.

# before (no method chaining)

filtered_df = df.loc[(df['a'] >= 3) & (df['c'].str.casefold() == 'y'), :].copy()

sorted_filtered_df = filtered_df.sort_values(by='a', ascending=False)

first_sorted_filtered_df = sorted_filtered_df.head(1)

no_c_first_sorted_filtered_df = first_sorted_filtered_df.drop(columns='c')

# after (method chaining)

no_c_first_sorted_filtered_df = (

df.loc[(df['a'] >= 3) & (df['c'].str.casefold() == 'y'), :].copy()

.sort_values(by='a', ascending=False)

.head(1)

.drop(columns='c')

)Where Chaining Breaks Down

So far, we’ve seen how method chaining can help us avoid clutter and keep transformations legible. But this style starts to fray when we need to modify columns mid-chain, especially using the familiar df['new_col'] = … pattern (note that this is also true for our .loc[…] syntax as well).

At first glance, it might seem like we can just drop an assignment into the middle of a chain. But try it, and things fall apart pretty quickly:

# Not Valid Python; This raises a SyntaxError

(

df.loc[(df['a'] >= 3) & (df['c'].str.casefold() == 'y'), :].copy()

.sort_values(by='a', ascending=False)

['d'] = ???['a'] ** 2

['b_norm'] = (???['b'] - ???['b'].mean()) / ???['b'].std()

.head(1)

.drop(columns='c')

)The problem here is twofold:

The syntax is invalid Python syntax. Meaning no matter what we do, this will never work.

The values denoted by ??? do not exist. We have no way to refer to "the DataFrame in its current state, after it has been filtered and sorted".

We can easily resolve this issue by breaking our method chain.

tmp_df = (

df.loc[(df['a'] >= 3) & (df['c'].str.casefold() == 'y'), :].copy()

.sort_values(by='a', ascending=False)

)

tmp_df['d'] = tmp_df['a'] ** 2

tmp_df['b_norm'] = (tmp_df['b'] - tmp_df['b'].mean()) / tmp_df['b'].std()

clean_df = tmp_df.head(1).drop(columns='c')

clean_df| a | b | d | b_norm | |

|---|---|---|---|---|

| 3 | 4 | 9 | 16 | 0.707107 |

Now our operations are scattered across two variables, which has a small impact on readability. In order to restore our method chaining, we can use pandas.DataFrame.assign, where we have a small trick: instead of referring directly to an existant DataFrame we can pass a function that accepts a DataFrame object as its argument. pandas will pass the current state of the DataFrame (in this case the filtered & sorted DataFrame) to this function so that it can be acted on.

clean_df = (

df.loc[(df['a'] >= 3) & (df['c'].str.casefold() == 'y'), :].copy()

.sort_values(by='a', ascending=False)

.assign(

d=lambda df_: df_['a'] ** 2,

b_norm=lambda df_: (df_['b'] - df_['b'].mean()) / df_['b'].std(),

)

.head(1)

.drop(columns='c')

)

clean_df| a | b | d | b_norm | |

|---|---|---|---|---|

| 3 | 4 | 9 | 16 | 0.707107 |

If you want to select some existing columns you can use __getitem__ syntax. Alternatively, if you want to add/modify columns, you can use the .assign method. However, this dichotomy is fairly artificial because we often will want to subselect some existing columns and transform others, and the syntax we just highlighted is not fit to express this idea coherently.

In the following DataFrame, our goal will be to subselect column 'a' and to also transform 'c' to have its values all transformed to upper-case.

import pandas as pd

df = pd.DataFrame({

'a': [1, 2, 3, 4, 5], 'b': [6, 7, 8, 9, 10], 'c': [*'XxYyz'],

})

new_df = (

df[['a', 'c']] # subselect columns 'a' and 'c'

.assign( # update column 'c'

c=lambda df_: df_['c'].str.upper()

)

)

print(new_df) a c

0 1 X

1 2 X

2 3 Y

3 4 Y

4 5 ZBut now that we’re being repetitive, we first need to add a column THEN subset AND include the newly transformed column. In SQL, we have a less verbose syntax for this common operation.

import duckdb

duckdb.sql('''

from df

select a, upper(c)

''')┌───────┬──────────┐

│ a │ upper(c) │

│ int64 │ varchar │

├───────┼──────────┤

│ 1 │ X │

│ 2 │ X │

│ 3 │ Y │

│ 4 │ Y │

│ 5 │ Z │

└───────┴──────────┘import polars as pl

(

pl.from_pandas(df)

.select(

'a',

pl.col('c').str.to_uppercase() # comparative to `.assign(c=lambda df_:…)`

)

)| a | c |

|---|---|

| i64 | str |

| 1 | "X" |

| 2 | "X" |

| 3 | "Y" |

| 4 | "Y" |

| 5 | "Z" |

Whereas Polars relies Expresssions to select columns, pandas uses lambda functions. My largest complaint about pandas here is that not all pandas.DataFrame methods are equal here, many methods accept a callable argument to pass the current DataFrame but some do not. Whereas in Polars, the Expressions are treated as first-class citizens since the API was designed around it. As we discussed in pandas, the use of passing callables was added post hoc in order to reduce the use of temporary variables and enable users to write more concise code.

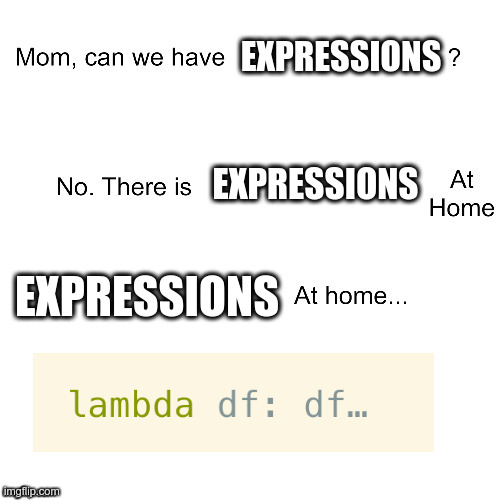

So for those who wish that pandas had an expression interface, make sure you're not missing out on passing callables around. Many pandas.DataFrame methods like .loc for row-wise filtering, .assign for column addition/modification allow you to pass a callable and preserve your method chaining syntax. However, a true expression interface is going to be infinitely more flexible which is why I refer to passing callables in pandas as the "Expressions we have at home"

Can pandas Support "Expressions"?

As with most things in Python, I would always argue "yes, it can be done." In my opinion, being able to select and modify columns at the same time is an incredibly common operation, but I also feel that the slight contortion we use to write pandas code is not that bad (e.g. assignment and subselecting the desired columns). That said, I am personally against adding a pandas.Expression style API, as I find that passing callables is already satisfactory. Do we need to write the text lambda many times? Yes, but at the same time with an Expression interface you'll need to also have some boilerplate there (e.g. Polars has pl.col which by my count has an equivalent number of characters as lambda). At the same time, when pandas doesn't expose a callable interface (looking at you .set_index) one can always fall back to capturing DataFrame state via .pipe or one can always create a temporary variable in to placate pandas syntax. Let's not act like either of these options (.pipe or temporary variable creation) is the end of the world when it comes to writing pandas code.

lambda: The Expressions At Home

So what would it look like if we don't bend over backwards to write a complex pandas expression API, and instead enhance the support for passing callables? Well there is a small fact that many users tend to overlook, and that is the fact that we have a broader programming language here to use for our convenience. If you’re working in a team or even just hopping between projects, you’ve probably written helper functions to smooth over these rough edges or to streamline some common operations in your role. So I figured: why not formalize that pattern a bit?

With that in mind, I decided to take my own attempt at adding a .select like interface to pandas and this is what I came up with. Including imports, the implementation itself is 16 lines of code. Since pandas has no concept of an Expression, I reached for the familiar callable passing to maintain pandas' current syntax (also this meant that I wouldn’t need to write any custom evaluation code).

from typing import Callable

import pandas as pd

INDEX_TYPES = (str, list, tuple, pd.Index, pd.MultiIndex)

@pd.api.extensions.register_dataframe_accessor("select")

class Select:

def __init__(self, df: pd.DataFrame):

self.df = df

def __call__(

self,

*funcs: str | Callable[pd.DataFrame, pd.DataFrame | pd.Series],

):

_funcs = (

(lambda df, x=x: df.loc[:, x]) if isinstance(x, INDEX_TYPES) else x

for x in funcs

)

return pd.concat([f(self.df) for f in _funcs], axis=1)

df = pd.DataFrame({'a': [1,2,3], 'b': [4,5,6]})

df.select(

'a', # carry forward an existing coliumn

lambda df: df['b'].pow(2).rename('c'),

lambda df: df[['a', 'b']].add(20).add_prefix('add_'),

)| a | c | add_a | add_b | |

|---|---|---|---|---|

| 0 | 1 | 16 | 21 | 24 |

| 1 | 2 | 25 | 22 | 25 |

| 2 | 3 | 36 | 23 | 26 |

The feature-set I present here is slightly different from the ENH: Implement DataFrame.select Pull Request in that I support passing callables to dynamically create new columns and use *args (which I agree departs significantly from other interfaces in pandas).

This gives us a flexible interface for mixing column selection and transformation in a single method call just like we can do in Polars and SQL.

Each argument passed to .select() can be either:

a string or list of column names (to select columns directly), or

a callable that takes the full DataFrame and returns a new Series or DataFrame (to transform or derive new columns).

Under the hood, the accessor treats each argument as a function and applies it to the original DataFrame. The results are then concatenated side-by-side, similar to how polars.DataFrame.select(…) works.

import polars as pl

from polars import col

pl_df = pl.from_pandas(df)

pl_df.select( # incredibly similar to the above pandas code!

'a',

pl.col('b').pow(2).alias('c'),

pl.col('a', 'b').add(20).name.prefix('add_')

)| a | c | add_a | add_b |

|---|---|---|---|

| i64 | i64 | i64 | i64 |

| 1 | 16 | 21 | 24 |

| 2 | 25 | 22 | 25 |

| 3 | 36 | 23 | 26 |

Then if we want to extend this to also mimic the .with_columns method in Polars, we can easily reuse the above code via inheritance:

@pd.api.extensions.register_dataframe_accessor("with_columns")

class WithColumns(Select):

def __call__(self, *funcs: str | Callable[pd.DataFrame, pd.DataFrame | pd.Series]):

results = super().__call__(*funcs)

# just pop in the current dataframe before concatenating the results

return pd.concat([self.df, results], axis=1)

df.with_columns(

lambda df: df['b'].pow(2).rename('c'),

lambda df: df[['a', 'b']].add(20).add_prefix('add_'),

)| a | b | c | add_a | add_b | |

|---|---|---|---|---|---|

| 0 | 1 | 4 | 16 | 21 | 24 |

| 1 | 2 | 5 | 25 | 22 | 25 |

| 2 | 3 | 6 | 36 | 23 | 26 |

# Equivalent Polars code for comparison

pl_df.with_columns(

pl.col('b').pow(2).alias('c'),

pl.col('a', 'b').add(20).name.prefix('add_')

)| a | b | c | add_a | add_b |

|---|---|---|---|---|

| i64 | i64 | i64 | i64 | i64 |

| 1 | 4 | 16 | 21 | 24 |

| 2 | 5 | 25 | 22 | 25 |

| 3 | 6 | 36 | 23 | 26 |

Wrap Up

And there you have it, a bit of pandas history and evolution of syntax as well as a very brief comparison against the Polars Expression interface. While the general callables and expressions are very different implementations, the way that Polars uses its Expressions API is similar to how we can pass callables around in pandas. There is much more to discuss on this topic, because we haven’t begun discussing how these interfaces are different from one another. More on that next week! Talk to you then.

What do you think so far? Let me know on the DUTC Discord server!